Data, Information and Knowledge

Data: A collection/string of text, numbers, symbols, images or sound in raw or unorganized form that has no meaning on its own.

Information: Data that has been processed and given context and meaning, so that it can be understood on its own.

Information is much more refined data. Data is given context by identifying what sort of data it is.

For example:- PC is data without any context. Once we identify that PC stands for Pranav Chugh, we can classify it as information.

Data sources

A direct data source (primary source) is data that has been collected first hand, usually by the person who intends to use that data.

| Advantages:- | Disadvantages:- |
|---|---|
| Collected data will be relevant to the purpose. | It can be expensive to collect, since companies might have to be hired to collect it. |
| Original source is verified. | Will take a long time to collect. |
| Data can be sold for other purposes. | Taking large data samples is difficult. |
| Data will be up to date. | May involve having to purchase printers, loggers, computers etc. |
| It can be presented in the required format. | By the time the project is completed, data might have gone out of date. |
| It is likely to be unbiased. | |

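An indirect data source (secondary source) is data that was originally collected for a different purpose. It has often been collected by a different person or organization.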

| Advantages:- | Disadvantages:- |
|---|---|
| Data is immediately available, and large samples for statistical analysis are more likely to be available. | Original source is not verified. |
| Quick | Data may not be up to date. |
| Cheap | Data is likely to be biased due to unverified sources. |
| | Sorting and filtering might be required to get rid of additional or irrelevant data. |

Static information source:- Static data is data that does not change regularly. It can be viewed offline and has a high chance of being accurate, since the information will have been validated before it was entered.

It tends to go out of date quickly, and once it has been created it is difficult to add information to it.

Dynamic information source:- Dynamic data is data that is updated whenever the source data changes, so the information is likely to be up to date.

There can be many contributors to the data.

Direct data sources:-

Questionnaires- Usually completed by individuals, either as a hard copy or online.

Most questions are closed-ended. This makes the results easy to analyse and interpret, because every respondent answers the same questions.

Large amounts of data can be collected, although individuals often do not fill in the form honestly, either because they want to appear socially desirable or because they only want the incentive offered at the end of the form.

Interviews- This form of data collection allows the interviewer to collect more detailed data, as responses can be explored in depth (questions are usually open-ended).

Interviews are costly to conduct, and it takes a long time to collect a large amount of data. The interviewee might also answer dishonestly, or lie in order to appear socially desirable.

Quality of Information

Accuracy: Data must be accurate in order to be considered of good quality. Typos, spelling mistakes and misplaced characters are all examples of inaccurate data.

Relevance: Information must be relevant to its purpose. Data must meet the requirements of the user.

Being given the rental price of a car when you want to buy one is an example of irrelevant data.

Age: Information must be up to date. Old information is likely to be out of date and therefore no longer useful.

Level of detail: There needs to be just the right amount of information for it to be good quality. Everything the user needs must be specified, without unnecessary extra detail.

Completeness: All the required information should be present. If information is missing, it cannot be used properly.

Encryption

Encryption is the process of scrambling data so that it cannot be understood without a decryption key. This makes it unreadable if intercepted. Data can be encrypted when stored on disks or when sent across a network.

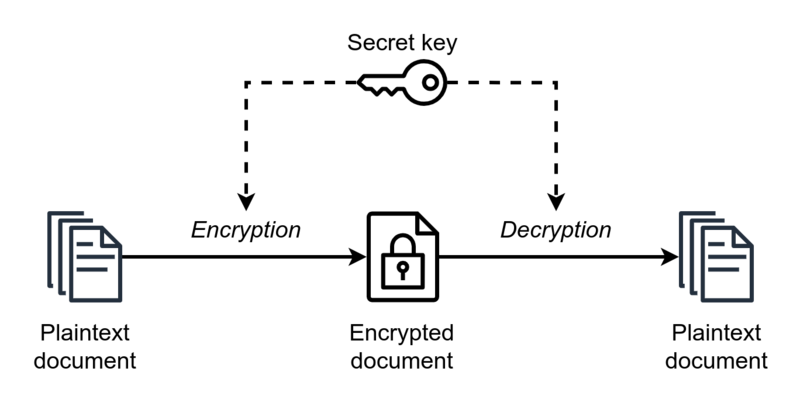

Symmetric encryption: This is the oldest method of encryption. It requires both the sender and the receiver to possess the same secret key, which is used for both encryption and decryption.

The secret key therefore has to be sent to the recipient. The sender encrypts the message using the secret key, and the recipient uses the same key to decrypt it.

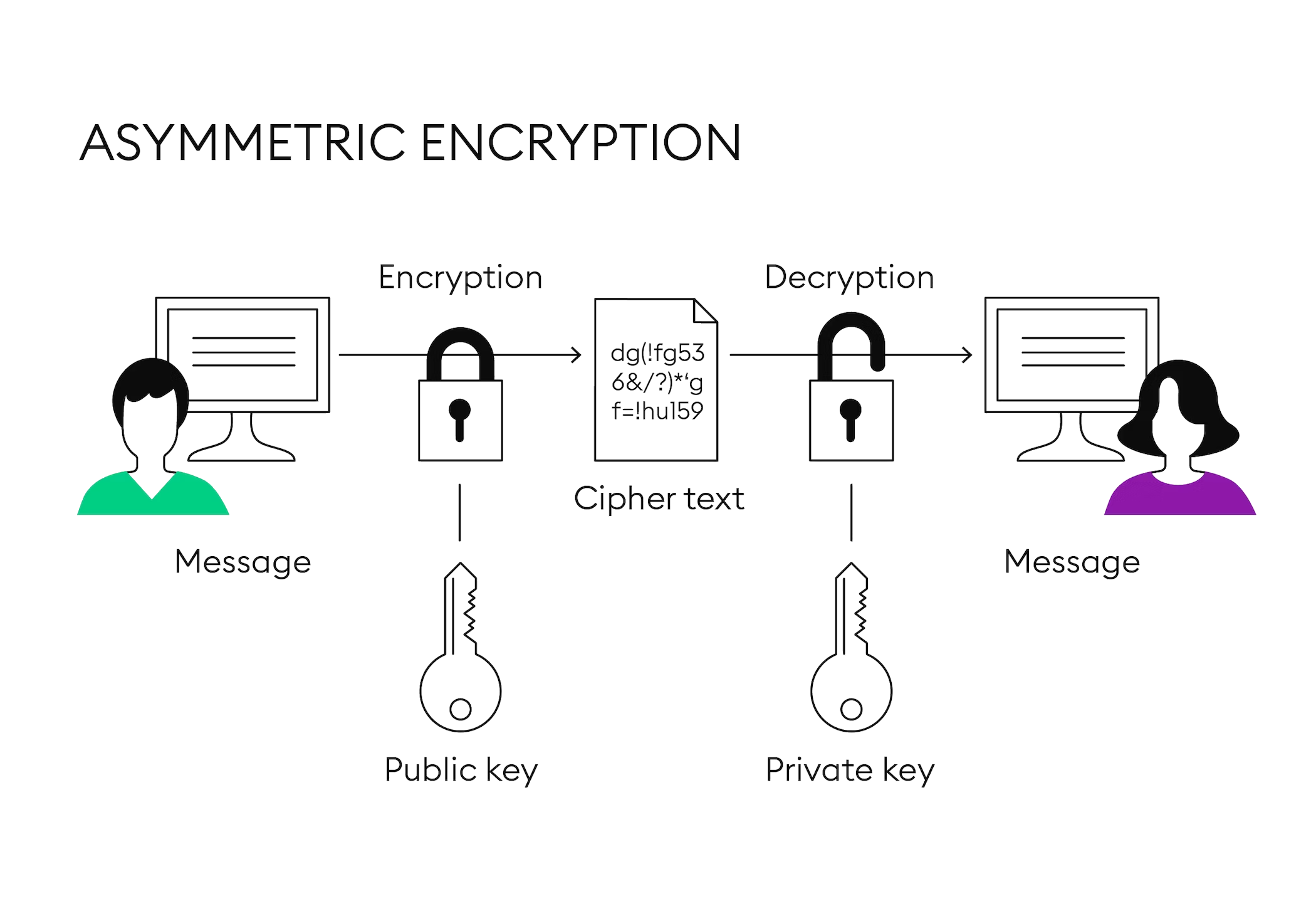
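A toy Python sketch of the shared-key idea. It uses a repeating-key XOR, which is an assumption chosen only because XOR reverses itself, so one key both encrypts and decrypts. It is not secure; real symmetric systems use algorithms such as AES.

```python
def xor_cipher(data: bytes, key: bytes) -> bytes:
    """Encrypt OR decrypt `data` with a repeating-key XOR.

    Toy example only: applying the same key twice gives back the original,
    which is the defining feature of symmetric encryption. NOT secure.
    """
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


# Hypothetical secret key that the sender and the recipient must both hold.
shared_key = b"s3cret"

message = b"Meet at 10am"
ciphertext = xor_cipher(message, shared_key)    # sender encrypts
plaintext = xor_cipher(ciphertext, shared_key)  # recipient decrypts with the SAME key
print(ciphertext)
print(plaintext)  # b'Meet at 10am'
```

Because the same key does both jobs, anyone who intercepts `shared_key` on its way to the recipient can also read every message.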

Asymmetric encryption: This overcomes the main problem with symmetric encryption, which is that the secret key could be intercepted when it is sent to the recipient.

This method includes a public key which is available to everyone and a private key which is known only to the recipient.

The sender encrypts data using the public key and the recipient decrypts it using their matching private key.
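The notes do not name an asymmetric algorithm, so this sketch assumes RSA, one widely used example, with tiny textbook primes so the numbers stay readable. Anyone can encrypt with the public key, but only the matching private key reverses it. Real keys are hundreds of digits long and use padding schemes; this is for illustration only.

```python
# Toy RSA key pair from tiny textbook primes (hypothetical example values).
p, q = 61, 53
n = p * q                 # 3233, part of both keys
phi = (p - 1) * (q - 1)   # 3120
e = 17                    # public exponent (shares no factor with phi)
d = pow(e, -1, phi)       # 2753, private exponent (modular inverse, Python 3.8+)

public_key = (e, n)   # published for everyone
private_key = (d, n)  # known only to the recipient


def apply_key(value: int, key: tuple[int, int]) -> int:
    """RSA encryption and decryption are the same operation: value^exponent mod n."""
    exponent, modulus = key
    return pow(value, exponent, modulus)


message = 65                                    # message already encoded as a number < n
ciphertext = apply_key(message, public_key)     # sender encrypts with the PUBLIC key
recovered = apply_key(ciphertext, private_key)  # recipient decrypts with the PRIVATE key
print(ciphertext, recovered)  # 2790 65
```

Intercepting `public_key` does not help an attacker, because working out `d` from `(e, n)` requires factorising `n`, which is infeasible for real key sizes.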

Requirements for a public key (contents of its digital certificate):

- Organisation name

- Organisation that issued the certificate (e.g. VeriSign)

- User's email address

- User's country

- User's public key

When a web browser connects to a secure site, it will check the digital certificate to ensure that the site is trusted.
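A heavily simplified sketch of the trust check. The `DigitalCertificate` class, its field names and the `trusted` set are hypothetical; the check only confirms that every field is filled in and that the issuer is on the browser's list. Real browsers also verify the issuer's digital signature, the expiry date and the domain name, none of which is modelled here.

```python
from dataclasses import dataclass


@dataclass
class DigitalCertificate:
    # Hypothetical field names mirroring the list of requirements above.
    organisation_name: str
    issuer: str        # organisation that issued the certificate, e.g. VeriSign
    email: str
    country: str
    public_key: str


def is_trusted(cert: DigitalCertificate, trusted_issuers: set[str]) -> bool:
    """Simplified check: all fields present AND issuer is one the browser trusts."""
    all_present = all([cert.organisation_name, cert.issuer, cert.email,
                       cert.country, cert.public_key])
    return all_present and cert.issuer in trusted_issuers


# Hypothetical list of certificate authorities built into the browser.
trusted = {"VeriSign"}

site_cert = DigitalCertificate("Example Shop Ltd", "VeriSign",
                               "admin@example.com", "UK", "hypothetical-key-data")
self_signed = DigitalCertificate("Example Shop Ltd", "Example Shop Ltd",
                                 "admin@example.com", "UK", "hypothetical-key-data")

print(is_trusted(site_cert, trusted))    # True
print(is_trusted(self_signed, trusted))  # False
```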

Validation

The process of checking whether data matches acceptable rules.

Presence check: This check ensures that data is entered into a field and not left blank.

Range check: Ensures that data is within a defined range. It has 2 boundaries: an upper and a lower boundary.

Type check: Ensures that the data is of a defined data type. For example, if age is entered, it must be an integer.

Length check: Checks the number of characters in a field, unlike a range check, which checks whether the value falls within a certain range. For example, a password must be 6 characters long.

Format check: Ensures that data matches a defined format, for example a date entered as DD/MM/YYYY.

Lookup check: Tests whether data exists in a list of acceptable values. This is similar to referential integrity.

Consistency check: Compares data in one field with data in another field that already exists within a record, to check that they are consistent. For example, if gender is Male, the user must be referred to as Mr.

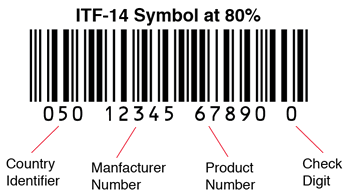
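The validation checks above can be sketched as small Python functions that return `True` when the data passes. The function names, the inclusive range boundaries, the DD/MM/YYYY date pattern and the sample values are illustrative assumptions.

```python
import re


def presence_check(value: str) -> bool:
    """Presence check: the field has not been left blank."""
    return value.strip() != ""


def range_check(value: int, lower: int, upper: int) -> bool:
    """Range check: value lies between a lower and an upper boundary (inclusive here)."""
    return lower <= value <= upper


def type_check(value: str) -> bool:
    """Type check: the entered text can be read as an integer (e.g. an age)."""
    try:
        int(value)
    except ValueError:
        return False
    return True


def length_check(value: str, required_length: int) -> bool:
    """Length check: the field has exactly the required number of characters."""
    return len(value) == required_length


def format_check(value: str) -> bool:
    """Format check: assumed DD/MM/YYYY pattern (checks the shape, not real dates)."""
    return re.fullmatch(r"\d{2}/\d{2}/\d{4}", value) is not None


def lookup_check(value: str, allowed: set[str]) -> bool:
    """Lookup check: the value exists in a list of acceptable values."""
    return value in allowed


def consistency_check(record: dict[str, str]) -> bool:
    """Consistency check: if gender is Male, the title must be Mr."""
    if record.get("gender") == "Male":
        return record.get("title") == "Mr"
    return True  # only the single rule from the notes is encoded here


# Hypothetical inputs for a student record form.
print(presence_check(""))                                     # False
print(range_check(17, 11, 18))                                # True
print(type_check("seventeen"))                                # False
print(length_check("abc123", 6))                              # True
print(format_check("25/12/2024"))                             # True
print(lookup_check("Blue", {"Red", "Green"}))                 # False
print(consistency_check({"gender": "Male", "title": "Mrs"}))  # False
```

Note that `format_check("32/13/2024")` would still pass: a format check only tests the shape of the data, so a range check is still needed on the day and month.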

Check digit: A number or letter added to the end of a field. It is the result of an algorithm performed on the digits before it. For example, in 192937 the final digit 7 is the result of the 2nd digit minus the 5th digit plus the 1st digit: 9 - 3 + 1 = 7.
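A sketch of the example rule from the notes (last digit = 2nd digit - 5th digit + 1st digit), applied to 6-digit codes. The function name and the 6-digit restriction are assumptions made for illustration.

```python
def check_digit_valid(code: str) -> bool:
    """Validate a 6-digit code with the example rule from the notes:
    last digit = 2nd digit - 5th digit + 1st digit.
    """
    if len(code) != 6 or not all(ch in "0123456789" for ch in code):
        return False
    d = [int(ch) for ch in code]   # d[0] is the 1st digit, d[5] the 6th
    expected = d[1] - d[4] + d[0]
    return d[5] == expected


print(check_digit_valid("192937"))  # True  (9 - 3 + 1 = 7)
print(check_digit_valid("182937"))  # False (8 - 3 + 1 = 6, so the typing error is caught)
```

This particular rule can produce values below 0 or above 9 for some codes. Real schemes, such as the ones used for ISBNs or the Luhn algorithm for card numbers, use modular arithmetic so that the check digit is always a single character.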

Verification

Verification means checking that the data on the original source document is identical to the data you have entered into the system.

Visual check: The process of manually looking at the data to ensure that it is correct and accurate.

Double data entry: Two people enter the same data and the two versions are compared, or one person enters the same data twice and the two entries are compared with one another.
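Double data entry can be sketched as comparing the two entries field by field and reporting where they differ. The function name and the sample record are hypothetical.

```python
def compare_entries(first: list[str], second: list[str]) -> list[int]:
    """Return the positions of fields that differ between two entries of the same data."""
    if len(first) != len(second):
        raise ValueError("the two entries have a different number of fields")
    return [i for i, (a, b) in enumerate(zip(first, second)) if a != b]


# Hypothetical record typed in twice from the same source document.
entry_1 = ["Smith", "12/03/2001", "Manchester"]
entry_2 = ["Smith", "12/03/2010", "Manchester"]

mismatches = compare_entries(entry_1, entry_2)
print(mismatches)  # [1] -> the date field must be checked against the source document
```

The comparison only shows that the two entries disagree; a person still has to go back to the source document to find out which one is correct.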

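Parity check: A parity bit is an extra bit added to a block of data to help detect errors. The bit can be either 0 or 1.

In an even parity check, the parity bit is chosen so that the total number of 1 bits (including the parity bit) is even: if the data already contains an even number of 1 bits, the parity bit is 0; if it contains an odd number, the parity bit is 1.

In an odd parity check, the parity bit is chosen so that the total number of 1 bits is odd: if the data contains an even number of 1 bits, the parity bit is 1; if it contains an odd number, the parity bit is 0.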
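A sketch of how a sender picks the parity bit and how the receiver checks it. Bits are represented as strings of '0' and '1' for readability; the function names and sample bits are hypothetical.

```python
def parity_bit(data_bits: str, scheme: str = "even") -> str:
    """Return the parity bit ('0' or '1') to append to a string of bits."""
    ones = data_bits.count("1")
    if scheme == "even":
        return "0" if ones % 2 == 0 else "1"   # make the total number of 1s even
    if scheme == "odd":
        return "1" if ones % 2 == 0 else "0"   # make the total number of 1s odd
    raise ValueError("scheme must be 'even' or 'odd'")


def parity_ok(received_bits: str, scheme: str = "even") -> bool:
    """Receiver's check: count the 1s in the whole block, parity bit included."""
    ones = received_bits.count("1")
    if scheme == "even":
        return ones % 2 == 0
    if scheme == "odd":
        return ones % 2 == 1
    raise ValueError("scheme must be 'even' or 'odd'")


data = "1011001"                        # four 1 bits
sent = data + parity_bit(data, "even")  # "10110010"
print(parity_ok(sent))                  # True
print(parity_ok("00110010"))            # False: one bit flipped, error detected
print(parity_ok("00010010"))            # True: two bits flipped, error missed
```

The last line shows the limitation of a single parity bit: if two bits are flipped, the count of 1s is still even and the error goes unnoticed.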

Checksum: A checksum is a value used to verify the integrity of data. It can be calculated using many different algorithms; for example, a very simple checksum can be the number of bytes in a file or the sum of their values. The checksum is recalculated after transmission, and if it does not match the value calculated from the source data, we can conclude that the data has been changed, for example by an interruption during transmission, a corrupted disk or a third party interfering with the data.
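A sketch of a simple additive checksum (the sum of all byte values, kept in the range 0-255 by taking the remainder after dividing by 256). The modulo-256 choice and the function names are assumptions; `zlib.crc32` from Python's standard library is used at the end to show a stronger algorithm.

```python
import zlib


def simple_checksum(data: bytes) -> int:
    """Additive checksum: sum of all byte values, kept in the range 0-255."""
    return sum(data) % 256


def arrived_intact(received: bytes, sent_checksum: int) -> bool:
    """Receiver recalculates the checksum and compares it with the one that was sent."""
    return simple_checksum(received) == sent_checksum


original = b"HELLO"
sent_checksum = simple_checksum(original)
print(sent_checksum)                            # 116
print(arrived_intact(b"HELLO", sent_checksum))  # True
print(arrived_intact(b"HELLP", sent_checksum))  # False: one character changed

# Weakness: swapping two bytes leaves the sum unchanged...
print(arrived_intact(b"EHLLO", sent_checksum))  # True (error missed)
# ...which a stronger algorithm such as CRC-32 does detect.
print(zlib.crc32(b"EHLLO") == zlib.crc32(original))  # False
```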

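Hash total: A hash total is the numerical sum of one or more fields in a file, usually fields whose total has no meaning of its own (such as ID numbers). When needed, the hash total is recalculated and compared with the original. If the totals do not match, we can conclude that data has been lost or changed, for example through errors in transmission.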

Control total: A control total is very similar to a hash total, but it is only carried out on numerical fields whose total is meaningful, such as the total value of all orders in a batch.
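A sketch of both totals on a small batch of records. Here the hash total is taken over customer ID numbers (a total with no meaning of its own) and the control total over order amounts. The records, field names and the use of pence (to avoid rounding) are hypothetical.

```python
# Hypothetical batch of order records (amounts in pence to avoid rounding).
orders = [
    {"customer_id": 1042, "amount_pence": 2550},
    {"customer_id": 2087, "amount_pence": 1000},
    {"customer_id": 3311, "amount_pence": 450},
]


def field_total(records: list[dict[str, int]], field: str) -> int:
    """Add up one numerical field across every record in the batch."""
    return sum(record[field] for record in records)


hash_total = field_total(orders, "customer_id")      # 6440: meaningless on its own
control_total = field_total(orders, "amount_pence")  # 4000: total value of the batch

# After transmission one record has gone missing.
received = orders[:2]
print(field_total(received, "customer_id") == hash_total)      # False -> data lost
print(field_total(received, "amount_pence") == control_total)  # False -> data lost
```

The arithmetic is identical for both totals; the difference is only whether the resulting number means anything to a person reading it.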

Data processing

Batch processing: Batch processing is a way to process large volumes of data. Transactions are collected over a period of time, then entered and processed together as a single group (a batch), after which the results are produced.

Master and transaction file: In such a system, the main data is stored in the ‘master’ file, which is sorted by a primary key. Each change that has to be made to the master file is known as a transaction. The transactions are stored in the transaction file before being used to update the master file.

The 3 main types of transaction are:

- Adding a new record

- Deleting a record

- Updating an existing record
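A simplified sketch of one batch run that applies a transaction file (add, delete, update) to a master file in primary-key order. Holding both files as in-memory Python structures, the record fields and the error list are assumptions; a traditional system would read the two sorted files sequentially instead.

```python
# Hypothetical master file: records keyed (and kept sorted) by primary key.
master = {
    101: {"name": "Asha", "balance": 50},
    102: {"name": "Ben", "balance": 20},
    104: {"name": "Chen", "balance": 75},
}

# Hypothetical transaction file collected over the day.
transactions = [
    ("update", 102, {"balance": 35}),
    ("add", 103, {"name": "Dana", "balance": 0}),
    ("delete", 104, None),
]


def run_batch(master, transactions):
    """Apply every transaction in one run, in primary-key order; return (new master, errors)."""
    new_master = {key: dict(rec) for key, rec in master.items()}  # old master kept unchanged
    errors = []
    for kind, key, data in sorted(transactions, key=lambda t: t[1]):
        if kind == "add" and key not in new_master:
            new_master[key] = dict(data)
        elif kind == "update" and key in new_master:
            new_master[key].update(data)
        elif kind == "delete" and key in new_master:
            del new_master[key]
        else:
            errors.append((kind, key))  # only discovered when the batch is run
    return dict(sorted(new_master.items())), errors


new_master, errors = run_batch(master, transactions)
print(new_master)  # {101: {'name': 'Asha', 'balance': 50}, 102: {'name': 'Ben', 'balance': 35}, 103: {'name': 'Dana', 'balance': 0}}
print(errors)      # []
```

The `errors` list reflects a disadvantage of batch processing: an invalid transaction (for example, updating a record that does not exist) is only found once the whole batch is processed.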

| Advantages:- | Disadvantages:- |
|---|---|
| Single process which requires minimal human participation | Delay, as data is not processed until a specific time |
| Can be scheduled to occur when there is little demand for computer resources | Errors cannot be identified and corrected until the data is processed |
| Reduces the chance of human error | |
| Fewer repetitive tasks for the operator | |

It is used when the master file must be kept up to date, for example by a travel agency selling tickets for its tours.

Once the computer starts processing the transactions, it will not process any other data until the current transaction is completed.

Real time processing

Real time systems are required to process information rapidly. Most real time systems process data instantly.

An application of this is a missile guidance system. Once a missile has been launched, the system must instantly adjust variables such as the position of its fins and its speed. Because the missile travels at a very high velocity, the inputs must be processed very quickly to affect the output. Most real time systems are control systems.
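A very simplified sketch of a real-time control loop: each cycle reads an input, processes it immediately and uses the result to change the output before the next reading. The heading model, the proportional correction rule and the gain of 0.5 are assumptions for illustration only; real missile guidance is far more complex.

```python
def fin_correction(target_heading: float, current_heading: float, gain: float = 0.5) -> float:
    """Proportional control: the further off course, the larger the fin adjustment."""
    return gain * (target_heading - current_heading)


def run_control_loop(target: float, start: float, cycles: int) -> list[float]:
    """Simulate a few control cycles and record the heading after each one."""
    heading = start
    history = [heading]
    for _ in range(cycles):
        correction = fin_correction(target, heading)  # process the sensor input at once
        heading += correction                         # toy model: fins change heading directly
        history.append(heading)
    return history


print(run_control_loop(target=90.0, start=80.0, cycles=4))
# [80.0, 85.0, 87.5, 88.75, 89.375]
```

Each output immediately becomes the next input, which is why any delay in processing would leave the system reacting to out-of-date readings.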